The daily routine of front end developers has evolved quite drastically within the past few years. Having an IDE and knowing about HTML, CSS, JavaScript, and browser inconsistencies is not enough anymore if you are setting up or joining a professional project. There is a range of nifty helpers that improve your productivity as well as code quality, and can be considered essential by now.

This article will be of most value to you if you use a Mac and:

- want to move beyond the basics and get into a professional workflow,

- are a front end dev who is trying to catch up after a pause,

- are a designer looking for more insight into the front end process,

- or are simply looking for a complete setup of tools to compare to your own.

If you’re here to get a quick overview without all the explanations, skip ahead to the summary at the end.

First things first: if you want to work on a professional project nowadays, getting to grips with the command line at least a little bit is unavoidable. This is due to the above-mentioned helper tools we started using to version, scaffold, process, and optimize our code to get it ready for launch as efficiently as possible. The majority of them are executed over the command line.

There are countless combinations of tools to choose from. With time you will start to refine your set depending on what best fits your needs and – even more importantly – your projects’ needs. To get you up and going quickly, I am sharing with you the setup of tools I currently rely on most often in my coding routine. They are, so to speak, “the modern front end developer’s basics”.

bash.

Let’s start with the command line itself. “bash” stands for “Bourne Again Shell”. If you type commands into the command-line interface of your choice (e.g. Terminal or iTerm), you’re talking to it. Apart from actually running the web dev tools we’ll talk about in a minute, it is generally useful to know some basic bash commands for any software you might install and use via the command line. Here’s what I most commonly do and when. If you want to, you can open Terminal and type along.

If you’re interested in mastering more commands, I recommend The Command Line Crash Course. It starts from the very beginning and only takes a couple of days.

Navigating through folders.

Alright, so open your Terminal and let’s get started.

- pwd will tell you where you are in your directory structure.

- cd .. will move you up out of your current folder into its parent.

- ls lists documents and folders in the directory you are in.

- cd plus the name of a directory will move you into it, e.g. cd Shared.

- You can also type cd and drag a folder from your Finder into Terminal to navigate into that folder.

- Tab completion: If you start typing a directory name and push the tab key, the name will automatically be completed.

- After you execute commands, you can flip through the command history with the up and down arrow keys (this will save you a lot of typing).
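If you would rather practice without touching your real folders, here is a small sketch that runs the commands above inside a throwaway directory. The directory names projects and demo are made up for illustration, and the sketch assumes mktemp -d is available, as it is on OS X and Linux.

```shell
# Create a throwaway playground so nothing in your real folders is touched.
# The names "projects" and "demo" are hypothetical.
playground="$(mktemp -d)"
mkdir -p "$playground/projects/demo"

cd "$playground"      # move into the playground
pwd                   # print where we are
ls                    # list its contents (shows: projects)

cd projects/demo      # move two levels down in one go
pwd

cd ..                 # move up into the parent folder, projects
pwd
```

After the last command you are back in projects, one level above demo.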

Getting info about your software.

The parameters --version or -v, --help or -h work with most software that is installed over the command line and give you further info about it. For example:

ruby --version

If errors are thrown while trying to get a web project to run, there’s a good chance that you’re not using the right software version of your command line tools with the code base you’re working on.
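When joining a project, it can help to check several tools in one go. The following is only a sketch: the function name report_versions and the list of tools are my own picks for illustration, and it assumes each tool understands --version.

```shell
# Print the version of each tool, or a note if it is not installed.
# The function name and the list of tools are only examples.
report_versions() {
  for tool in git node npm ruby; do
    if command -v "$tool" >/dev/null 2>&1; then
      # Most tools print their version on the first line of output.
      printf '%s: %s\n' "$tool" "$("$tool" --version 2>&1 | head -n 1)"
    else
      printf '%s: not installed\n' "$tool"
    fi
  done
}

report_versions
```

Comparing this output with what the project's documentation asks for is often the quickest way to spot a version mismatch.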

Searching through files with grep.

This one I don’t use very often, but on the rare occasion I need it, it’s a lifesaver. grep is a command that lets you search for a piece of text in many files and prints where it finds matches. For example:

grep -r "mytext" *

This command will find all occurrences of “mytext” in all files that are in the directory you are currently in and its subdirectories (-r stands for “recursive”).

Grep seems simple but it is powerful. It lets you perform complex searches with the help of regular expressions. That is actually what the name stands for: “Global Regular Expression Print”.
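To give an idea of what a regular expression search can look like, here is a minimal sketch that looks for six-digit hex color codes. The files, their contents, and the pattern are made up for this example; -n and -E are standard grep options for line numbers and extended regular expressions.

```shell
# Build a tiny throwaway project to search in. All file names and
# contents are made up for this example.
demo="$(mktemp -d)"
mkdir -p "$demo/css" "$demo/js"
printf 'a { color: #ff0000; }\n' > "$demo/css/main.css"
printf 'var color = "#00ff00";\nvar size = 12;\n' > "$demo/js/app.js"
cd "$demo"

# -r searches subdirectories, -n adds line numbers, -E enables
# extended regular expressions. The pattern matches a "#" followed
# by six lowercase hex digits.
matches="$(grep -rnE '#[0-9a-f]{6}' .)"
printf '%s\n' "$matches"
```

Each line of the result names the file, the line number, and the matching line. The line with var size is not listed because it contains no color code.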

There are many more bash command variants that can be just as useful. All of them are listed in this documentation.

Versioning with Git.

Git is a version control system and in my opinion a most useful tool that should be used for every project you create, no matter how tiny. Generally speaking, it monitors the changes in your project files and saves a history of them. You can retrace all changes and jump back and forth as you like. It also enables you to work collaboratively on the same codebase by tracking who changed which lines of code and keeping a shared codebase that everybody contributes to on a server.
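As a rough sketch of that history in action, the following creates a repository in a throwaway directory and records two versions of one file. The file name, commit messages, and the user identity are hypothetical, and the identity is set for this one repository only.

```shell
# Start a repository in a throwaway directory.
repo="$(mktemp -d)"
cd "$repo"
git init -q

# Hypothetical identity, stored in this repository only.
git config user.name "Demo User"
git config user.email "demo@example.com"

# Record the first version of a file.
printf '<h1>Hello</h1>\n' > index.html
git add index.html
git commit -q -m "Add index page"

# Change the file and record the second version.
printf '<h1>Hello, world</h1>\n' > index.html
git commit -q -a -m "Update headline"

# Show the history, newest commit first.
git log --oneline
```

The log lists both commits, and each of them can be inspected or restored later.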

Doing basic operations with Git is not hard. However, the more people work on a project, the more confusing the produced history becomes and the more likely it is that conflicts occur because more than one person tries to change the same line of code. Then you are quickly in need of more advanced Git commands, which can feel like a real science.

Getting into the details of Git would be too much for this article but I do have some links for you. You can find info on how to get started with Git on the official Git page. The reference manual there will also come in handy for sure.

There are some applications that provide a GUI for Git. Hardcore command line people will probably give you a funny look but I personally use SourceTree by Atlassian. I prefer viewing file differences and the commit history in SourceTree but usually execute Git commands simultaneously over the command line.

Start simple, maybe create some test projects on GitHub to tinker around and see what works best for you.

Beware the binary.

One last piece of advice: Git tracks changes in text files and saves only those changes in its history. If you start committing binaries like image files and then change them, it has to save the entire file again. Depending on how frequently you do this, your Git history can become larger than you might like.

Package managers: Homebrew, npm and bower.

Software that is used to build the web ages as rapidly as everything on the web. It is quite common that a project that was started last year does not work with the current versions of its dependencies anymore. If you are working on more than one project at a time, you will run into the need of being able to use different versions of software for different projects.

Actually, wouldn’t it be great if the entire set of needed software including its versions could be defined and gathered at once?

This is where you will start being grateful for package managers. The following ones work at different levels, so they cannot replace one another.

Homebrew.

Homebrew is a package manager for OS X, so it is useful for much more than front end development. As they say on their website, it “installs the stuff you need that Apple didn’t”, for example Node.js. A complete list of available packages can be found on Search Brew.

Homebrew will load and install a piece of software and all of its dependencies with the single command

brew install [package]

It saves the software it installs into a specially created directory named Cellar/ and then symlinks into /usr/local.

You can install multiple versions of a piece of software in parallel with Homebrew. By using switch, the symlink is updated to whichever version is desired, which lets you jump back and forth as needed.

brew switch [package] [version]
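To make the symlink idea more tangible, here is an imitation in plain shell. It is not Homebrew and does not call it: the tool name mytool, its version numbers, and the directory layout under a temporary prefix are all hypothetical stand-ins.

```shell
# NOT Homebrew itself: a plain-shell imitation of the idea.
# "mytool" and its version numbers are hypothetical.
prefix="$(mktemp -d)"
mkdir -p "$prefix/Cellar/mytool/1.0" "$prefix/Cellar/mytool/2.0"
echo "1.0" > "$prefix/Cellar/mytool/1.0/VERSION"
echo "2.0" > "$prefix/Cellar/mytool/2.0/VERSION"

# Point the "active" link at version 1.0.
ln -sfn "$prefix/Cellar/mytool/1.0" "$prefix/mytool"
cat "$prefix/mytool/VERSION"    # prints 1.0

# Switching versions only replaces the link; both installs stay on disk.
ln -sfn "$prefix/Cellar/mytool/2.0" "$prefix/mytool"
cat "$prefix/mytool/VERSION"    # prints 2.0
```

Because only the link changes, jumping between versions is fast and nothing has to be reinstalled.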

More on how to work with Homebrew can be found in the documentation.

npm.

npm stands for “node package manager” and offers a set of packages that are based on Node.js. You can install them with all needed dependencies via

npm install [package]

The most popular packages include yo, Grunt, and bower. These make up the Yeoman workflow for web projects, which we will look at in a second. But there are also many other packages that you will want to use in your projects. You can even make your own (and even private ones).

The packages can be installed locally, meaning there is not a single global version on your computer, but each project has its own set in its working directory. To keep track of the packages you are including in your project, there is a file named package.json in which all packages and versions are defined. If somebody new joins a project and is given this file, they can install everything defined in there by executing

npm install

Usually, you would keep package.json in your Git repository while leaving all the actual modules out of it. This keeps your repository slim and up- and downloads to a minimum.
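A minimal sketch of such a setup, written from the shell into a throwaway directory, could look like this. The project name my-webapp and the version ranges are illustrative assumptions, not recommendations.

```shell
# Write an example package.json into a throwaway project directory.
# The project name and the version ranges are illustrative only.
project="$(mktemp -d)"
cd "$project"

cat > package.json <<'EOF'
{
  "name": "my-webapp",
  "version": "0.1.0",
  "private": true,
  "devDependencies": {
    "grunt": "^0.4.5",
    "grunt-contrib-jshint": "^0.10.0",
    "grunt-contrib-uglify": "^0.5.0"
  }
}
EOF

# Keep the installed modules out of Git; only package.json is committed.
echo "node_modules/" > .gitignore

# A new team member would now run "npm install" in this directory.
```

npm places installed packages in node_modules/, which is why that folder is the one listed in .gitignore.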

The documentation of npm can be found here.

bower.

bower is explicitly a package manager for the web. It is installed via npm and is part of the Yeoman workflow, which comes up next. Its packages are JavaScript libraries and frameworks such as jQuery, Backbone, and Modernizr. You can search through them here or over the command line with

bower search [package]

Similar to npm, the setup of required packages is defined in a JSON-formatted file named bower.json, which is usually the only part that is kept in a Git repository. Everything stated in there is then loaded into your project through

bower install

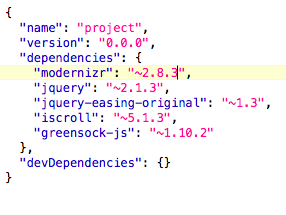

This is what bower.json typically looks like.
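In text form, a small bower.json might be created like this. It is a sketch with an assumed project name and illustrative version ranges, not a copy of the file in the screenshot.

```shell
# Write an example bower.json into a throwaway project directory.
# The project name and the version ranges are illustrative only.
project="$(mktemp -d)"
cd "$project"

cat > bower.json <<'EOF'
{
  "name": "my-webapp",
  "private": true,
  "dependencies": {
    "jquery": "~2.1.1",
    "modernizr": "~2.8.1"
  }
}
EOF

# "bower install" reads this file and downloads the listed packages.
# By default they land in bower_components/, which is kept out of Git here.
echo "bower_components/" > .gitignore
```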

Nice! Here’s a list of all bower commands.

Scaffolding with Yeoman’s yo.

Alright, we are pretty deep into it by now. Conveniently loading JavaScript libs into a project with bower is great. But automatically setting up your project directory structure and basic file templates to get started is even better. This is where Yeoman’s yo comes into play.

With the yo command line tool (which is also installed using npm) and one of the many Yeoman generators that are available, you can scaffold – meaning to build a base structure for – any type of web project. This ranges from simple webapps to Angular, Laravel, or Meteor projects.

If you can’t find a generator that suits your needs you can create your own.

First you globally install the needed generator, for example:

npm install -g generator-webapp

Then – if you’re not already there – you navigate into your project directory and let the scaffolding begin by executing the generator:

yo webapp

And within just a few seconds you have a project set up. Here’s what we just created in an empty folder webapp/.

Can you spot the package.json and bower.json files we talked about earlier? Some defaults will already be set but you can always configure to your needs and update your project.

So far, we have talked about two of the three tools that are part of the Yeoman workflow: yo and bower. Keep on reading to find out what the third, namely Grunt, is all about. It’s definitely worth it.

Building the project with Grunt.

This one will probably have the greatest impact on the way you work on your projects. It’s a task runner. The Grunt website states the reason to use it: it “lets you automate just about anything with a minimum of effort”. What could that be useful for after we have our project laid out nicely and are ready to code? Lots! Let me explain.

The tasks that Grunt runs are defined sets of plug-ins which you can load with npm, configure in your Gruntfile.js, and then execute in order with the task-name you give them.
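To show how loading, configuring, and naming fit together, here is a sketch that writes a small Gruntfile.js from the shell. The source and target paths are assumptions about a project layout, and the two plug-ins, grunt-contrib-jshint and grunt-contrib-uglify, are just examples that would have to be installed with npm first.

```shell
# Write an example Gruntfile.js into a throwaway project directory.
# The paths under app/ and dist/ are hypothetical.
project="$(mktemp -d)"
cd "$project"

cat > Gruntfile.js <<'EOF'
module.exports = function (grunt) {
  // Configure each plug-in under its own key.
  grunt.initConfig({
    jshint: {
      all: ['Gruntfile.js', 'app/scripts/**/*.js']
    },
    uglify: {
      dist: {
        files: { 'dist/scripts/main.min.js': ['app/scripts/**/*.js'] }
      }
    }
  });

  // Load the plug-ins that were installed with npm.
  grunt.loadNpmTasks('grunt-contrib-jshint');
  grunt.loadNpmTasks('grunt-contrib-uglify');

  // Running plain "grunt" executes these tasks in the listed order.
  grunt.registerTask('default', ['jshint', 'uglify']);
};
EOF
```

With this file in place, grunt would first check the JavaScript and then minify it, while grunt jshint would run the check alone.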

For example, Yeoman generators provide grunt serve to run while you’re coding. It starts a local webserver, opens a browser window and reloads the page whenever there are changes in the code base. This basically lets you see what you are programming in real time.

Running grunt will build your optimized project into a /dist subdirectory. “Optimized” can mean many things in this case. Here are some more example plug-ins you can add to the basic grunt or any other Grunt task.

- grunt-contrib-jshint checks your JavaScript code with JSHint

- grunt-contrib-sass compiles Sass to CSS

- grunt-contrib-uglify minifies files with UglifyJS

- grunt-contrib-jasmine runs your Jasmine specs if you’re into TDD

And many, many more. You can optimize your images, create code documentation on the fly, compile Handlebars templates, and whatnot. Here’s a list of all plug-ins. It’s probably a good idea to check if they are still updated before you use them. Quality can roughly be judged by the number of downloads. You can also create your own Grunt plug-ins.

For the sake of completeness, I would like to mention that Grunt is not the only task runner out there. Gulp in particular seems equally popular. They each have their (slight?) pros and cons, which shall be discussed in a different article.

JavaScript Frameworks.

You were actually looking for something completely different when starting to read this? Hoping to learn about essential JavaScript libraries to include in your projects? Sorry to disappoint. I feel that there is no toolset in this category that can be generally recommended.

Every project has its own highly individual requirements. How vital is SEO? Are there REST services involved? Is there a lot of animation or DOM manipulation? These are some of the questions you should ask yourself before deciding on the set of JavaScript libs you are going to use.

It is important to choose wisely what to incorporate instead of following trends.

A project that might help you to make a decision on what framework will work well to generally structure your code is TodoMVC.

Summary.

Phew. This was it. You made it to the end. I hope you got an impression of what is out there that can improve your coding workflow. Let’s look at it again in a more compressed format.

We used:

- bash commands to get started using the command line.

- Git to keep track of our code changes and work collaboratively.

- Homebrew to manage software for OS X.

- npm to manage software needed to scaffold, process, and optimize our code.

- bower to pull JavaScript libraries into our project.

- yo and its generators to scaffold out a fresh project.

- Grunt for processing our code and viewing changes in real-time.

I am still far from being a command line ninja, but now you can probably understand why I say there isn’t a single day of writing code anymore without me opening Terminal on my Mac. It makes the web dev process way smoother, and I can tell you it feels great to get this dark area on the map of my programming skills more and more under control.

What I introduced you to are the most valued and used commands and tools in my personal workflow. You’ve got a different setup, would like to add to this one, or know other ways and tricks for front enders to get things done faster? Great! Feel free to share in the comments below. I’d love to know about them!
