Front end performance is getting more and more attention from developers who build for the responsive web. Performance problems show up in virtually every website that goes beyond the simplest level of complexity. On one side there is heavy use of external libraries plus more calculation and DOM manipulation in the front end; on the other side there are potentially slow devices on crappy internet connections. Creating a great (responsive) UX therefore calls for carefully considering the performance impact of every asset and feature of a website.

You might already have checked out my last post, Mastering Front End Performance: The Beginning, in which I describe the problems that drew my attention to the topic and sparked a desire to improve the quality of the web products I build. However, I wasn’t sure where and how to start improving the performance of the code I write, as performance is not a tangible trait, nor a problem that can be fixed like a simple bug.

So the first question to solve was: how can performance be turned into something tangible and therefore testable?

Performance is Relative

To actually make a statement about how good or bad performance is, we have to be able to measure and compare it. We need a defined set of rules that websites can be run against, so that we can judge them by more than our subjective perception, not least to have proof for ourselves and our clients that something really is working better (or worse) than before.

Measuring Tools

Luckily, there are tools that make this possible and provide such a set of rules, at least for optimizing the initial loading and display of a website. Two promising ones are the following web-based tools.

WebPageTest

Simply copy and paste the URL of your site into WebPageTest and click “Start Test”. It takes a while, but the results are very detailed and the generated data is visualized in different ways to help you judge different aspects.

You can check out your “Speed Index” score to judge at a glance how you’re doing or dive deep into the type and order of requests. Nicolas Bevacqua gives a great intro on how to analyze the data shown by WebPageTest in his article Let’s talk about Web Performance.

One great feature he also mentions is hidden in the “Visual Comparison” tab, which you can select on the main page. You don’t necessarily have to enter more than one URL; you can also use it to see the different stages of your site loading, or even create a slow-motion video of the order in which the elements of your page load.

Why is this handy? You can use it to improve the perceived performance of your site, which can make a big difference. Making sure your users see the site build up, instead of staring at a blank page for seconds until everything appears at once, will not shorten the actual load time, but the site will still feel much more responsive.
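The Speed Index score puts a number on exactly this difference: it is roughly the area above the visual progress curve, so a page that paints most of its content early gets a lower (better) score than one that stays blank and then shows everything at once. Here is a minimal sketch of that idea. The `frames` shape and the `speedIndex` function are made up for illustration; WebPageTest derives the real value from a video capture of the page load.

```javascript
// Approximate a Speed Index from visual progress samples.
// Each frame: { time: ms since navigation start, complete: 0..100 (% visually complete) }.
// Frames are assumed to be sorted by time and to end at 100% complete.
function speedIndex(frames) {
  let total = 0;
  let prevTime = 0;
  let prevComplete = 0; // the page starts out blank

  for (const frame of frames) {
    // Between the previous frame and this one, the page looked
    // `prevComplete` percent done, so (1 - prevComplete/100) was still missing.
    total += (frame.time - prevTime) * (1 - prevComplete / 100);
    prevTime = frame.time;
    prevComplete = frame.complete;
  }
  return Math.round(total);
}

// Two hypothetical pages that are both fully rendered after 3 seconds.
const progressive = [
  { time: 500, complete: 60 },
  { time: 1500, complete: 90 },
  { time: 3000, complete: 100 },
];
const allAtOnce = [{ time: 3000, complete: 100 }];

console.log(speedIndex(progressive)); // 1050
console.log(speedIndex(allAtOnce)); // 3000
```

Both pages finish at the same time, but the one that builds up progressively scores almost three times better.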

An important advantage of WebPageTest, also mentioned in Designing for Performance (which is now available online for free), is that you get to pick the browser and location from which to test your site.

“Synthetic performance tools help you get a better sense of how your pages load by using a third party’s testing location and device; you can see how your site performs on various platforms across the world.” – Lara Callender Hogan, Designing for Performance

PageSpeed Insights

As mentioned above, WebPageTest is rather slow to produce test results. It also gives you a “Speed Index” score, but what is this number good for if you have nothing to compare it to right away? PageSpeed Insights has advantages here. It seems a bit simpler, but it gives you quick and actionable advice on how to improve your site’s loading performance. It also gives you a score relative to the maximum possible score (e.g. 66 out of 100 points). It differentiates between “Desktop” and “Mobile” devices, which seems a little outdated when working with responsive sites, but the output is interesting either way.

To start a test you just have to enter a URL and click “Analyze”.

Measure and Compare

A one-time test might give you an impression of the major issues your website has, but the real benefit of measuring performance comes from comparing: comparing a site to similar sites, or to sites with (perceived) better performance, and, even more importantly, comparing a site to itself over time. That is what lets you improve performance by continuously tracking it.

When I found out about WebPageTest and PageSpeed Insights, I was a bit disappointed at first. Not because they aren’t great tools or don’t show relevant data, but because it would take far too much time to manually check how my performance score has changed every time I commit a change. That seems to force you to plan a dedicated phase for performance improvements into a project. This phase is likely to come at the end, which means there is not much you can still do without a great amount of effort.

To really get as much out of performance measuring as possible, it has to be carried out in all programming stages. Detailed improvements can only be achieved if the process of tracking performance is an effortless task that runs on the side.

So I only really got excited about measuring performance once I found out about ways of automating it.

Automating Performance Measuring

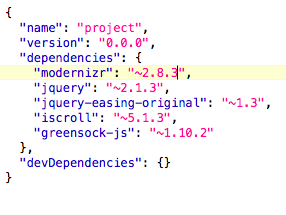

Both tools that we’ve looked at above can be run in an automated way using the task runner Grunt, which you might already include in your development workflow anyway.

grunt-perfbudget

The Grunt plug-in that uses WebPageTest is called grunt-perfbudget. According to the documentation you can use “either a public or private instance of WebPageTest to perform tests on a specified URL”.

Now the performance budgets finally come into play: The plug-in works by letting you set values/budgets for various options like fullyLoaded, requests, and SpeedIndex. If the values your site produces are not within the budget limit, the task fails.
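To make this more concrete, here is a rough sketch of what such a configuration could look like. The URL, the API key and all budget numbers are placeholders I made up, and I wrapped the configuration in a named `configure` function only to keep the example easy to read; check the plug-in’s documentation for the full list of options.

```javascript
// Gruntfile.js (sketch): fail the build when WebPageTest results exceed the budget.
function configure(grunt) {
  grunt.initConfig({
    perfbudget: {
      default: {
        options: {
          url: "http://example.com", // placeholder: the deployed site to test
          key: "YOUR_WEBPAGETEST_API_KEY", // placeholder: key for the public WebPageTest instance
          budget: {
            fullyLoaded: "5000", // placeholder: max. milliseconds until fully loaded
            requests: "40", // placeholder: max. number of requests
            SpeedIndex: "2000", // placeholder: max. Speed Index score
          },
        },
      },
    },
  });

  grunt.loadNpmTasks("grunt-perfbudget");

  // `grunt perf` runs the budget check.
  grunt.registerTask("perf", ["perfbudget"]);
}

// Grunt expects the Gruntfile to export this function.
module.exports = configure;
```

With something like this in place, running `grunt perf` should fail as soon as one of the measured values exceeds its budget.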

grunt-pagespeed

If grunt-perfbudget feels too slow for you to include in your build process, you should give grunt-pagespeed, which uses PageSpeed Insights, a try.
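A configuration for it might look roughly like the following sketch. The URL and the threshold scores are placeholders of mine, and the `configure` name is only there for readability. The task fails when the score PageSpeed Insights returns is below the `threshold` of a target.

```javascript
// Gruntfile.js (sketch): fail the build when the PageSpeed score drops below a threshold.
function configure(grunt) {
  grunt.initConfig({
    pagespeed: {
      // Options shared by all targets below.
      options: {
        nokey: true, // run without a Google API key
        url: "http://example.com", // placeholder: must be publicly reachable
      },
      desktop: {
        options: {
          strategy: "desktop",
          locale: "en_GB",
          threshold: 80, // placeholder: minimum acceptable score out of 100
        },
      },
      mobile: {
        options: {
          strategy: "mobile",
          locale: "en_GB",
          threshold: 70, // placeholder: minimum acceptable score out of 100
        },
      },
    },
  });

  grunt.loadNpmTasks("grunt-pagespeed");
}

// Grunt expects the Gruntfile to export this function.
module.exports = configure;
```

`grunt pagespeed` would then run both targets, while `grunt pagespeed:mobile` runs only the mobile check.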

It is also possible to run PageSpeed Insights via Gulp by installing psi.
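As a rough sketch of how that could be wired up: psi exposes an `output` function that prints a report and, as far as I can tell, rejects its promise when the score is below the given `threshold`. The `pagespeedTask` helper, the URL and the threshold values below are my own placeholders, not part of psi or Gulp. The psi module is passed in as a parameter so the helper does not depend on how it is loaded.

```javascript
// Build a Gulp-compatible task: a function that returns a promise.
// `psi` is the module you get from require("psi").
function pagespeedTask(psi, url, strategy, threshold) {
  return function pagespeed() {
    // Prints the report to the terminal; the returned promise lets Gulp
    // know when the task has finished or failed.
    return psi.output(url, { strategy, threshold });
  };
}

// In gulpfile.js, assuming `npm install --save-dev gulp psi`:
//
//   const gulp = require("gulp");
//   const psi = require("psi");
//
//   gulp.task("psi:mobile", pagespeedTask(psi, "http://example.com", "mobile", 70));
//   gulp.task("psi:desktop", pagespeedTask(psi, "http://example.com", "desktop", 80));
```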

Setting Performance Budgets

The optimization of performance is a highly individual task for each website as it greatly depends on the system setup and the actually available options for improvements. In the end, it is up to us to decide when the goal is reached. To do this, it is important to develop a feeling for it.

Start by tracking the performance of the websites you build. Setting budgets, meaning maximum acceptable values for requests and timing, will get easier once you get a feeling for what has a great impact on performance and what doesn’t.
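One possible way to get from tracked numbers to a first budget is to take the typical value of past runs and allow a bit of headroom on top. This is only a sketch of that idea under my own assumptions: the function names, the 10% headroom and the sample values are all made up, and neither of the tools above works this way out of the box.

```javascript
// Median of a list of numbers (the "typical" run, robust against outliers).
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Suggest a budget per metric: median of past runs plus some headroom.
function suggestBudget(runs, headroom = 0.1) {
  const budget = {};
  for (const metric of Object.keys(runs[0])) {
    const typical = median(runs.map((run) => run[metric]));
    budget[metric] = Math.round(typical * (1 + headroom));
  }
  return budget;
}

// List the metrics of a run that exceed the budget.
function overBudget(run, budget) {
  return Object.keys(budget).filter((metric) => run[metric] > budget[metric]);
}

// Hypothetical results from three earlier test runs.
const history = [
  { requests: 38, fullyLoaded: 4200, SpeedIndex: 1800 },
  { requests: 41, fullyLoaded: 4600, SpeedIndex: 2100 },
  { requests: 40, fullyLoaded: 4400, SpeedIndex: 1900 },
];

const budget = suggestBudget(history);
console.log(budget);

// A new run that added a few too many requests.
const latest = { requests: 52, fullyLoaded: 4300, SpeedIndex: 1950 };
console.log(overBudget(latest, budget)); // [ 'requests' ]
```

The suggested numbers are only a starting point; the idea is to tighten them once you know which changes actually move them.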

The State of the Art

This post needs an extended conclusion. Why? I am still not too happy with the currently available tools for measuring loading performance. Both tools I introduced require a website to be deployed before it can be tested. Yes, that is the only way of measuring performance realistically, but having to wait for a deployment every time you make a commit doesn’t exactly make it the effortless “on the side” task I was wishing for further up in the post. I also face legal restrictions here, as I am usually not allowed to deploy the code I write to the public until my client decides to launch.

I am still looking for a solution that can be run locally and can somehow be configured to roughly simulate the production environment. The only plug-in I found that works locally is grunt-phantomas, but its GUI is too cluttered to give an overview and there is no overall index to keep track of performance at a glance. I could invest the time to set up a private instance of WebPageTest. Or I could use PageSpeed Insights via a tunnel with Gulp and ngrok, which seems destined to distort the results. But overall, there doesn’t seem to be a solution yet that is easy to set up and lets you track performance at a glance without deployment.

If you have found a great way of incorporating measuring page loading performance into your workflow, please leave me a comment. I’d be glad to know about it.

Still have some question marks hovering above your head? I’m not done with the topic yet either; we’ve barely scratched the surface. There’s more to come. If you’d like to get a note when a new post has been published, make sure to sign up.
